The future of artificial intelligence is facing a massive power crisis, but a solution is emerging from the field of neuromorphic computing. Spiking Neural Networks, often referred to as the ‘third generation’ of neural networks, are redefining energy efficiency by mimicking the way biological neurons communicate. Unlike traditional AI models, which run the full network on every input, spiking neural networks fire only when incoming signals push them past a threshold. This makes them one of the most promising technologies for the next era of edge computing and sustainable AI.

1. The Energy Crisis: Why Traditional AI is Hitting a Wall

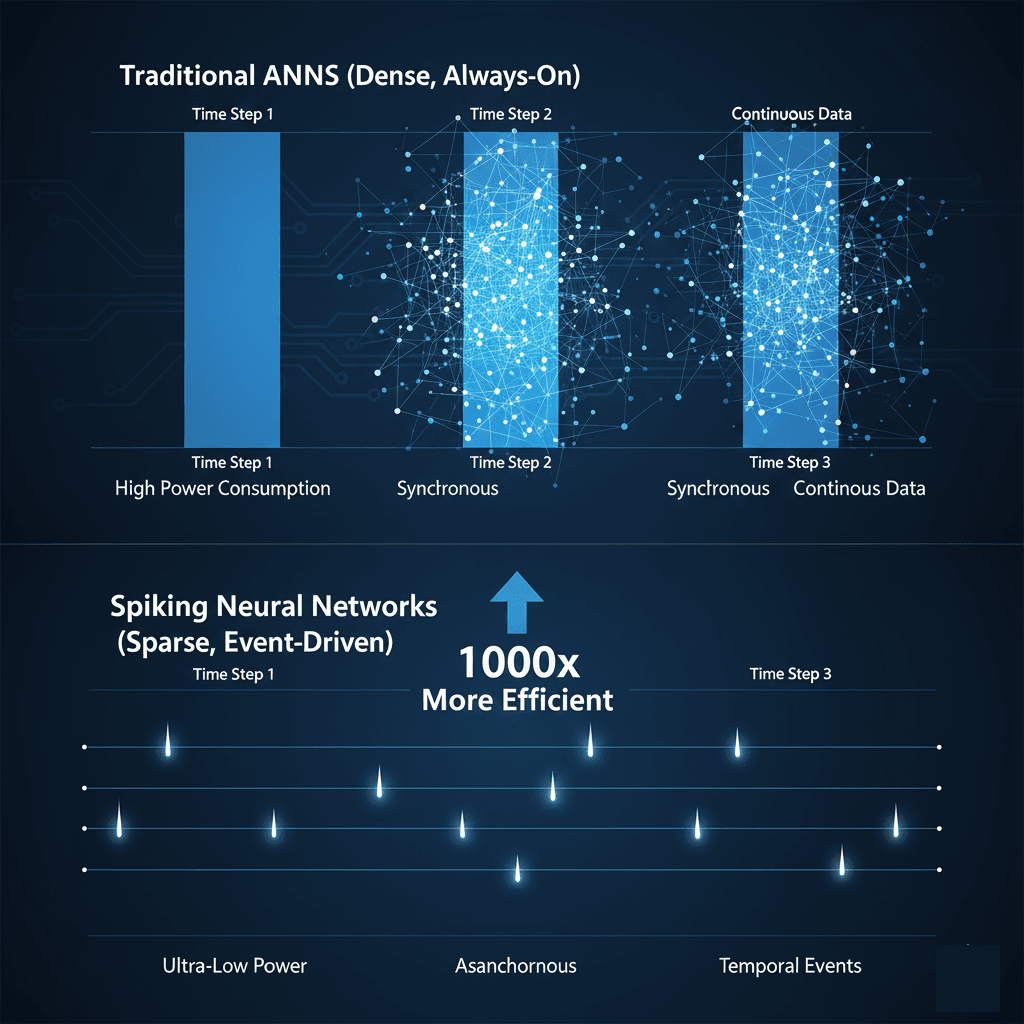

To understand why Spiking Neural Networks are the future, we have to look at the flaw in the present. Traditional Artificial Neural Networks (ANNs), like the ones powering ChatGPT, are “always on” in a computational sense: every input, however simple or repetitive, triggers mathematical operations across all of the model’s weights, which can number in the millions or billions. This is known as dense computation.
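To make dense computation concrete, here is a minimal pure-Python sketch that counts the operations in one fully connected layer. The dense version multiplies every weight by its input, even when that input is zero. The event-driven version only touches the weights of inputs that spiked. The layer size (1,000 inputs, 100 outputs) and the roughly 5% spike rate are arbitrary assumptions chosen for illustration, not measurements from any real model.

```python
import random

def dense_layer(inputs, weights):
    """Dense ANN layer: every weight is multiplied by its input, even if the input is 0."""
    outputs, macs = [], 0
    for row in weights:
        total = 0.0
        for w, x in zip(row, inputs):
            total += w * x  # one multiply-accumulate (MAC)
            macs += 1
        outputs.append(total)
    return outputs, macs

def event_driven_layer(spikes, weights):
    """Event-driven layer: only inputs that spiked (value 1) trigger any work."""
    active = [i for i, s in enumerate(spikes) if s]
    outputs, adds = [], 0
    for row in weights:
        total = 0.0
        for i in active:
            total += row[i]  # a spike is binary, so this is an addition, not a multiply
            adds += 1
        outputs.append(total)
    return outputs, adds

random.seed(0)
n_in, n_out = 1000, 100
weights = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_out)]
# Assumption for illustration: about 5% of inputs spike in this time step.
spikes = [1 if random.random() < 0.05 else 0 for _ in range(n_in)]

dense_out, macs = dense_layer(spikes, weights)
sparse_out, acs = event_driven_layer(spikes, weights)
print(f"dense MACs: {macs}, event-driven additions: {acs}")
```

Both layers produce the same outputs. The difference is that the dense layer’s cost is fixed by its size, while the event-driven layer’s cost scales with how many inputs are actually active.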

This approach is incredibly power-hungry. As we move toward 2026, the industry is pivoting toward sustainability. According to recent research on brain-inspired computing, SNNs could reduce energy consumption by up to 1,000x compared to conventional ANNs. You can get the complete paper in PDF format: Spiking Neural Networks: The Future of Brain-Inspired Computing
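Where does a figure like “up to 1,000x” come from? A common back-of-the-envelope approach multiplies operation counts by an assumed energy per operation. A dense ANN pays for one multiply-accumulate (MAC) per connection. An SNN pays for one cheaper addition per connection, but only when a spike arrives, repeated over several time steps. The ratio is then roughly E_MAC / (spike rate × time steps × E_AC). The sketch below uses per-operation energies of 4.6 pJ per MAC and 0.9 pJ per addition and hypothetical spike rates. These are illustrative assumptions, not values from the cited paper, and the estimate ignores memory access and other hardware overheads.

```python
# Illustrative per-operation energies (assumptions, in picojoules).
E_MAC_PJ = 4.6  # one multiply-accumulate in a dense ANN
E_AC_PJ = 0.9   # one accumulate (addition) triggered by a spike

def ann_energy_pj(n_connections):
    """Dense ANN: one MAC per connection for every input."""
    return n_connections * E_MAC_PJ

def snn_energy_pj(n_connections, spike_rate, time_steps):
    """SNN: one addition per connection, but only when the input neuron spikes.

    spike_rate is the fraction of neurons spiking per time step (0 to 1).
    """
    return n_connections * spike_rate * time_steps * E_AC_PJ

n_connections = 1_000_000
ann = ann_energy_pj(n_connections)
# Hypothetical scenarios: (spike rate, number of time steps)
for rate, steps in [(0.2, 8), (0.05, 4), (0.01, 2)]:
    snn = snn_energy_pj(n_connections, rate, steps)
    print(f"spike rate {rate:.2f}, {steps} steps: ~{ann / snn:.0f}x less compute energy")
```

The advantage shrinks as activity or the number of time steps grows. With dense firing over many time steps, an SNN can lose its edge entirely, so large savings depend on sparse spiking.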

2. What Exactly Are Spiking Neural Networks?

The human brain is one of the most energy-efficient computers known. It runs on about 20 watts, less than a dim lightbulb, yet it still outperforms supercomputers at many real-time pattern-recognition tasks.

How does it do it? It uses “spikes.”

In your brain, neurons don’t send a constant stream of information. They sit quietly until they receive enough stimulus to reach a “threshold.” Then they fire a quick burst of electricity, a spike, and go silent again. Spiking Neural Networks mimic this behavior. Instead of continuous numerical values, they communicate through discrete events in time.
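This “sit quietly, reach a threshold, fire, go silent” cycle is often modeled with a leaky integrate-and-fire (LIF) neuron. Below is a minimal discrete-time sketch. The threshold, leak factor, and input values are arbitrary illustrative choices, and real SNN frameworks and chips use more detailed variants.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate one leaky integrate-and-fire (LIF) neuron in discrete time.

    input_current: list of input values, one per time step.
    Returns the list of time steps at which the neuron spiked.
    """
    v = v_reset  # membrane potential
    spike_times = []
    for t, i_t in enumerate(input_current):
        v = leak * v + i_t  # leak some charge, then integrate the new input
        if v >= threshold:  # threshold reached: fire a spike...
            spike_times.append(t)
            v = v_reset     # ...then reset and go silent again
    return spike_times

print(simulate_lif([0.05] * 20))  # → []
print(simulate_lif([0.4] * 20))   # → [2, 5, 8, 11, 14, 17]
```

The weak input leaks away as fast as it accumulates, so the neuron never reaches the threshold and stays silent. The stronger input crosses the threshold every third step, producing a regular spike train.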

The Motion-Sensor Analogy: Think of a traditional AI like a lightbulb that stays on 24/7. Spiking Neural Networks are like a motion-sensor light. They only “fire” when there is relevant data to process. No movement? No energy used.

3. Why Spiking Neural Networks Change Everything for “Edge AI”

We believe the next decade of tech will be defined by Edge AI—intelligence that lives on your device, not in a cloud data center. SNNs are the key to this transition for three main reasons:

Extreme Energy Efficiency

Because SNNs are “event-driven,” they consume significant power only when their input data changes. In a surveillance camera, if nothing is moving, an SNN-based chip uses almost no power. This could enable AI-powered devices that run for years on a single coin-cell battery.
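Event-based vision sensors follow a similar principle: instead of sending whole frames, they report only the pixels whose brightness changes. The sketch below approximates this by differencing two frames. That is a simplifying assumption, since real event cameras respond asynchronously, pixel by pixel. The threshold of 10 and the tiny 2-by-3 frames are made up for illustration.

```python
def frame_to_events(prev_frame, frame, threshold=10):
    """Emit an event only for pixels whose brightness changed by >= threshold.

    Frames are lists of rows of integer brightness values (0-255).
    Returns a list of (row, col, polarity) tuples; polarity is +1 (brighter) or -1 (darker).
    """
    events = []
    for r, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for c, (old, new) in enumerate(zip(prev_row, row)):
            diff = new - old
            if abs(diff) >= threshold:
                events.append((r, c, 1 if diff > 0 else -1))
    return events

static = [[100, 100, 100],
          [100, 100, 100]]
moved = [[100, 180, 100],
         [100, 100, 40]]

print(frame_to_events(static, static))  # → []
print(frame_to_events(static, moved))   # → [(0, 1, 1), (1, 2, -1)]
```

A static scene produces an empty event list, so an event-driven network downstream has nothing to compute. When something moves, only the two changed pixels generate work.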

Near-Zero Latency

Traditional AI pipelines typically wait until a full frame or “batch” of data has been collected before processing it. SNNs process each piece of information as it arrives. For a self-driving car, this is the difference between “calculating” a stop and “reacting” to a pedestrian in real time.
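The latency difference can be made concrete with simple arithmetic. In the sketch below, a frame-based system cannot notice a change until the next frame is captured, while an event-driven system starts processing as soon as the change occurs. The 30 fps frame rate, the 10 ms processing time (assumed equal for both systems), and the 15 m/s vehicle speed are hypothetical values. The model also ignores sensor readout and other real-world delays.

```python
import math

def frame_based_latency_ms(event_time_ms, fps, processing_ms):
    """A change is only seen when the next frame is captured, then processed."""
    frame_period_ms = 1000.0 / fps
    next_frame_ms = math.ceil(event_time_ms / frame_period_ms) * frame_period_ms
    return (next_frame_ms - event_time_ms) + processing_ms

def event_driven_latency_ms(processing_ms):
    """The change itself is the input event, so processing starts immediately."""
    return processing_ms

speed_m_per_s = 15.0  # hypothetical vehicle speed (about 54 km/h)
processing_ms = 10.0  # hypothetical processing time, same for both systems
event_time_ms = 1.0   # pedestrian appears 1 ms after a frame was captured

for name, latency in [
    ("frame-based (30 fps)", frame_based_latency_ms(event_time_ms, 30, processing_ms)),
    ("event-driven", event_driven_latency_ms(processing_ms)),
]:
    distance_m = speed_m_per_s * latency / 1000.0
    print(f"{name}: {latency:.1f} ms, car travels {distance_m:.2f} m before reacting")
```

At 30 fps, waiting for the next frame alone can add up to about 33 ms. An event-driven pipeline avoids that wait.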

Continuous Learning (The Holy Grail)

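Most AI today is “static.” Once it’s trained, it’s frozen, and to teach it something new, you have to retrain it. SNNs can instead use a biologically inspired learning rule called STDP (Spike-Timing-Dependent Plasticity). STDP strengthens a connection when the sending neuron fires just before the receiving neuron and weakens it when the order is reversed. Because the rule depends only on locally observed spike timing, neuromorphic hardware can adjust its “synapses” on the fly, learning from new data without needing to be connected to a server.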
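A common textbook form of STDP is the pair-based rule sketched below. For each pair of input (pre-synaptic) and output (post-synaptic) spikes, the weight change decays exponentially with the time gap between them. It is positive if the input fired first and negative otherwise. The learning rates, 20 ms time constants, and weight bounds are illustrative assumptions, and neuromorphic chips may implement different variants.

```python
import math

def stdp_weight_change(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP: weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:  # input fired before output: it may have caused the spike, so strengthen
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:  # input fired after output: it could not have caused it, so weaken
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the updates over all spike pairs, then clip to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_weight_change(t_pre, t_post)
    return min(max(weight, w_min), w_max)

print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))  # input leads: weight grows
print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))  # input lags: weight shrinks
```

Every quantity the rule needs (two spike times and the current weight) is available at the synapse itself, which is what makes on-chip learning without a server plausible.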

4. Neuromorphic Hardware: Who is Leading the Charge?

We are finally moving past the research phase. Major players are now releasing “brain chips,” ranging from advanced research platforms to commercially available processors:

- Intel Loihi 2: A research chip that has already demonstrated 10x faster processing than traditional CPUs for robotic arm control.

- BrainChip Akida: Marketed as the world’s first commercial neuromorphic processor, designed for sensors that need to recognize voices or vibrations at the “edge.”

- NICE 2026: The upcoming Neuro-Inspired Computational Elements conference is set to showcase how these chips are being integrated into consumer electronics.

5. Challenges to Widespread Adoption

If Spiking Neural Networks are so efficient, why aren’t they everywhere yet?

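- Software Gap: Mainstream AI frameworks (PyTorch, TensorFlow) were built around dense tensor operations on GPUs. Writing code for SNNs requires a different, “temporal” mindset, because information is carried by when neurons spike, not only by numerical values.

- Training Complexity: Training these networks is mathematically harder because spikes are discrete, all-or-nothing events. The spike function is a step whose derivative is zero everywhere except at the threshold, where it is undefined, so gradient-based optimizers such as backpropagation receive no useful learning signal from it.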
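One widely used workaround, not covered above, is the surrogate gradient method: keep the hard spike in the forward pass, but substitute a smooth stand-in derivative during the backward pass. The pure-Python sketch below trains a single weight so that a neuron spikes for a given input. The fast-sigmoid surrogate shape, slope, learning rate, and starting weight are illustrative assumptions. Practical SNN libraries typically build this into automatic differentiation rather than writing the chain rule by hand.

```python
def spike(v, threshold=1.0):
    """Forward pass: hard threshold (Heaviside step), output is 0 or 1."""
    return 1.0 if v >= threshold else 0.0

def exact_grad(v, threshold=1.0):
    """True derivative of the step: 0 everywhere except at the threshold,
    where it is undefined. Returning 0 means no learning signal flows."""
    return 0.0

def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Fast-sigmoid-shaped stand-in derivative, used only in the backward pass.
    It peaks at the threshold and decays smoothly away from it."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2

def train_weight(grad_fn, w=0.5, x=1.0, target=1.0, lr=0.5, steps=20):
    """Gradient descent on loss = (spike(w * x) - target) ** 2 for one weight."""
    for _ in range(steps):
        v = w * x
        out = spike(v)
        # Chain rule: dL/dw = 2 * (out - target) * d(out)/dv * dv/dw, with dv/dw = x
        grad = 2.0 * (out - target) * grad_fn(v) * x
        w -= lr * grad
    return w

w_exact = train_weight(exact_grad)
w_surrogate = train_weight(surrogate_grad)
print(w_exact, spike(w_exact))          # weight stays at 0.5; the neuron never fires
print(w_surrogate, spike(w_surrogate))  # weight crosses the threshold; the neuron fires
```

With the exact derivative, the weight never moves because every gradient is zero. With the surrogate, the neuron receives a signal whenever its potential is near the threshold and learns to fire.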

6. Conclusion: The Future is Spiking

We are transitioning from the “Brute Force” era of AI to the “Efficiency” era. While the world is distracted by who has the most GPUs, the real innovators are looking at how to do more with less.

Spiking Neural Networks are the bridge that will take AI out of massive, hot data centers and put it into every low-power device around us, making those devices truly intelligent, private, and sustainable.
